Deriv Case study: System design

PRESENTATION AT A GLANCE

Here’s the summarized presentation of the solution design.

You can view the presentation by following this link for a full page view.

ASSUMPTIONS

- While exploring Deriv’s blog (https://tech.deriv.com/), technical environment, public statements, etc., I learned that Deriv ‘strive continually for multi-Cloud parity with AWS, GCP, Alicloud, and Openstack‘. Hence, I’ve designed the solution around AWS components, as it seems Deriv has adopted AWS. While a similar design can be replicated for an on-prem deployment, I’ve used the respective AWS services as design elements for convenience & to keep the design in line with real-world use cases.

- While exploring Deriv’s API Explorer, I’ve found that it has also adopted WebSockets (https://api.deriv.com/docs/resources/api-guide#websockets). While designing the solution, I’ve also taken this into consideration.

- Assuming all 40 Consumers will receive the SAME Market Data. While this design keeps a provision for different payloads for different consumers (through a separate Kafka Topic, AWS SNS Topic or AWS EventBridge Event Rule), I haven’t discussed different payloads for different Consumers at length, for simplicity.

- Market Data can come in different types/formats: in a file (in that case, AWS services like AWS DataSync may come into the scene), stored in a database (relational or in-memory), or as JSON, i.e. a textual format. For this design, I’ve assumed that the data will be available in JSON or a similar application-readable format.

- While exploring the API documentation, Market Data payloads don’t seem to be that big. Hence a single market data message is assumed to be no more than 256KB.

- While a financial system might require Real-Time Data Analysis, for this particular Case Study no data analysis or in-transit processing is in scope.
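The 256KB size assumption above could be checked at the producer before a message enters the pipeline. This is a minimal sketch, assuming JSON-encoded market data; the function name `fits_size_limit` and the sample tick fields are hypothetical and not taken from Deriv’s API.

```python
import json

MAX_PAYLOAD_BYTES = 256 * 1024  # the 256KB limit assumed above


def fits_size_limit(market_data: dict, limit: int = MAX_PAYLOAD_BYTES) -> bool:
    """Return True if the JSON-encoded payload is within the size limit."""
    encoded = json.dumps(market_data, separators=(",", ":")).encode("utf-8")
    return len(encoded) <= limit


# Hypothetical tick; the field names are illustrative only.
tick = {"symbol": "EURUSD", "bid": 1.0841, "ask": 1.0843, "epoch": 1700000000}
print(fits_size_limit(tick))
```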

FUNCTIONAL REQUIREMENTS

- Need to develop a Market Data Component for an existing Financial System. Assuming the existing Financial System is decoupled through a Microservice Architecture.

- Need to develop a Real-time Streaming Data Pipeline for the Market Data Component, serving Geo-distributed consumers.

- Need to develop a Data Producer Component to ingest Market Data from a Central Server.

- Need to develop a Data Consumer Component to consume Market Data from the data pipeline. This component will be distributed across different geo-locations (40 different servers).

NON-FUNCTIONAL REQUIREMENTS

- Need to have a Failover Capability in case of a disaster.

- Data pipeline should have a simultaneous & reliable parallel communication channel.

Key Components

Now, looking at the requirements, let’s first figure out the Key Actors or Key Components to be designed here.

- DATA PRODUCER: Here the Central Server is the Data Producer. As assumed, the Central Server will have the Market Data in a readable format. However, if the market data is in a file format, the AWS DataSync service can be used for faster & more convenient file transfer across different geo-locations. If the data is stored in a database, a Lambda function can be written to connect to the database, read the market data & send it to the Data Pipeline.

- DATA CONSUMER: 40 different Data Consumers will each have an Endpoint to which the Market Data will be pushed. With 40 consumers distributed across the globe, consumer-side changes will be difficult to manage. So the solution should have minimal impact on the Data Consumers & should use a widely supported framework or protocol.

- DATA PIPELINE: This is the main component of this Case Study. One single Data Producer will broadcast to 40 different Data Consumers. This is a perfect scenario to introduce a Pub-Sub architecture. Since all 40 consumers will get the same Market Data, the data will fan out, i.e. be copied to all 40 consumers.

- REAL-TIME STREAMING DATA: All 40 consumers should receive the data at the same time, i.e. simultaneously, even though they are located in different geo-locations. Also, this market data is a continuous stream with real-time updates. For real-time data streaming, the communication path needs to avoid per-message overhead. For example, opening a new connection for every update pays for TCP’s 3-way handshake (plus HTTP request headers) each time, which would be a big overhead in this design. Protocols like WebSocket reduce latency by keeping a single long-lived connection open, so that cost is paid only once.

- GEO-DISTRIBUTED DATA CONSUMER: For this geo-distributed data pipeline, the network needs to be reliable. Since the central server is the single source of truth while all the data consumers are distributed across the globe, we need to rely on a reliable backbone network. For example, AWS has a gigantic backbone network that connects all of their datacenters across the globe. And the good thing is that AWS lets you pass your network traffic through this reliable & fast backbone for a reasonable fee using AWS Global Accelerator. In this design, I am going to use it to leverage AWS’s Backbone Network.

- FAILOVER CAPABILITY: As per Murphy’s Law, ‘Anything that can go wrong will go wrong’. 😀 As system architects we can only hope for the best but prepare for the worst. 🙂 For failover protection, we need to implement a DR policy. But the critical question is how much redundancy to provide, because this can get costly. Hence, I have considered a reasonable contingency plan while designing this component. But this can surely be extended if this is mission-critical transaction data.

Communication Protocol

Let’s begin with the Communication Protocol to be used in this solution. The goal is to achieve a real-time data streaming pipeline. Below are the communication channels considered for this pipeline.

TCP with Custom Protocol:

We can try TCP with a custom protocol for this case to get more control over communication reliability. But this is not a universal proposition. Since we have to consider Northbound Interface support, it makes sense to use a universally adopted protocol. Also, a custom protocol would mean designing & maintaining our own framing, reconnection & reliability logic on every consumer.

HTTP Polling or HTTP Long-Polling:

We can try either HTTP Polling or Long Polling for this communication. HTTP is a widely accepted protocol, so Northbound support is no longer an issue. But in this case, the clients (Market Data Consumer servers) would periodically invoke a GET API to fetch the Market Data. While this design is valid, it is not very efficient, since there are continuous pull requests from 40 different servers, each carrying full HTTP request overhead even when there is no new data.
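To make the polling overhead concrete, here is a rough back-of-the-envelope calculation. The 1-second poll interval and the 5-second update interval are assumptions chosen for illustration, not measured values.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def polling_requests_per_day(consumers: int, poll_interval_s: float) -> int:
    """Total GET requests per day if every consumer polls at a fixed interval."""
    return int(consumers * SECONDS_PER_DAY / poll_interval_s)


def empty_poll_fraction(poll_interval_s: float, update_interval_s: float) -> float:
    """Rough fraction of polls that find no new data when updates are rarer than polls."""
    if update_interval_s <= poll_interval_s:
        return 0.0
    return 1.0 - poll_interval_s / update_interval_s


requests = polling_requests_per_day(consumers=40, poll_interval_s=1.0)
print(requests)  # 40 * 86400 = 3456000
print(empty_poll_fraction(poll_interval_s=1.0, update_interval_s=5.0))
```

Under these assumptions, roughly four out of five requests would return nothing new, which is the inefficiency that a push-based channel avoids.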

WebSocket:

WebSocket can be a great option for this case. While being a bi-directional protocol, WebSocket significantly reduces HTTP overhead: once the connection is established through a one-time HTTP Upgrade handshake, messages flow continuously over the same TCP connection, without a new TCP 3-way handshake or HTTP headers per message.

While studying Deriv’s API Documentation, I found that Deriv has a WebSocket implementation, which also strongly persuaded me to use WebSocket for this case study.

https://api.deriv.com/docs/resources/api-guide#websockets
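A WebSocket connection starts with a single HTTP Upgrade handshake, after which frames are exchanged over the same TCP connection. As a small illustration of that one-time handshake, the sketch below computes the `Sec-WebSocket-Accept` value a server returns for a client’s `Sec-WebSocket-Key`, as defined in RFC 6455. The function name is mine; the sample key is the one used in the RFC’s own example.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept header value for a client key."""
    digest = hashlib.sha1((sec_websocket_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```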

Server-Sent Events (SSE):

While WebSocket is a great option, it is worth mentioning another great fit for this case: Server-Sent Events (SSE).

SSE is similar to WebSocket, but while WebSocket is bidirectional, SSE is uni-directional, sending events from the server (the Central Server where the Market Data is stored) to the clients (the 40 different consumers of the market data).

SSE is easy to implement: the server keeps an HTTP response open with the text/event-stream content type, and clients consume it through the EventSource API or any HTTP client that can read a streamed response.
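For illustration, this is roughly what a single market data update looks like on the wire in the `text/event-stream` format. It is a minimal sketch; the helper name `format_sse`, the event name `tick` and the payload fields are assumptions.

```python
import json
from typing import Optional


def format_sse(data: dict, event: Optional[str] = None, event_id: Optional[str] = None) -> str:
    """Serialize one Server-Sent Event; a blank line terminates the event."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    lines.append(f"data: {json.dumps(data, separators=(',', ':'))}")
    return "\n".join(lines) + "\n\n"


print(format_sse({"symbol": "EURUSD", "bid": 1.0841}, event="tick", event_id="7"), end="")
```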

gRPC with ProtoBuf:

gRPC could have been another option here. With gRPC, ProtoBuf can be used instead of JSON for faster message encoding & decoding. But in my opinion this may add complexity to the system & can be overkill. Also, every consumer would need ProtoBuf tooling & generated code for its language, which can be a challenge.

Finally, after much consideration, I've decided to go with WebSocket for this component design, since it is a very suitable protocol for real-time communication without per-message connection overhead & is widely supported as well. Also, its bi-directional nature may come in handy if we want to implement an additional layer of reliability on data delivery (for example, delivery acknowledgements from consumers).
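One way the bi-directional channel could support a reliability layer is by having each consumer acknowledge the messages it receives. The sketch below is an assumption on my part, not part of the design above: the class `AckTracker` and the consumer and message ids are hypothetical.

```python
class AckTracker:
    """Track which consumers have acknowledged each message."""

    def __init__(self, consumer_ids):
        self._expected = set(consumer_ids)
        self._acked = {}  # message_id -> set of consumer ids that acknowledged it

    def record_ack(self, message_id: str, consumer_id: str) -> None:
        if consumer_id not in self._expected:
            raise KeyError(f"unknown consumer: {consumer_id}")
        self._acked.setdefault(message_id, set()).add(consumer_id)

    def missing(self, message_id: str) -> list:
        """Consumers that have not yet acknowledged the given message."""
        return sorted(self._expected - self._acked.get(message_id, set()))


tracker = AckTracker(["consumer-01", "consumer-02", "consumer-03"])
tracker.record_ack("msg-1", "consumer-01")
tracker.record_ack("msg-1", "consumer-03")
print(tracker.missing("msg-1"))  # ['consumer-02']
```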

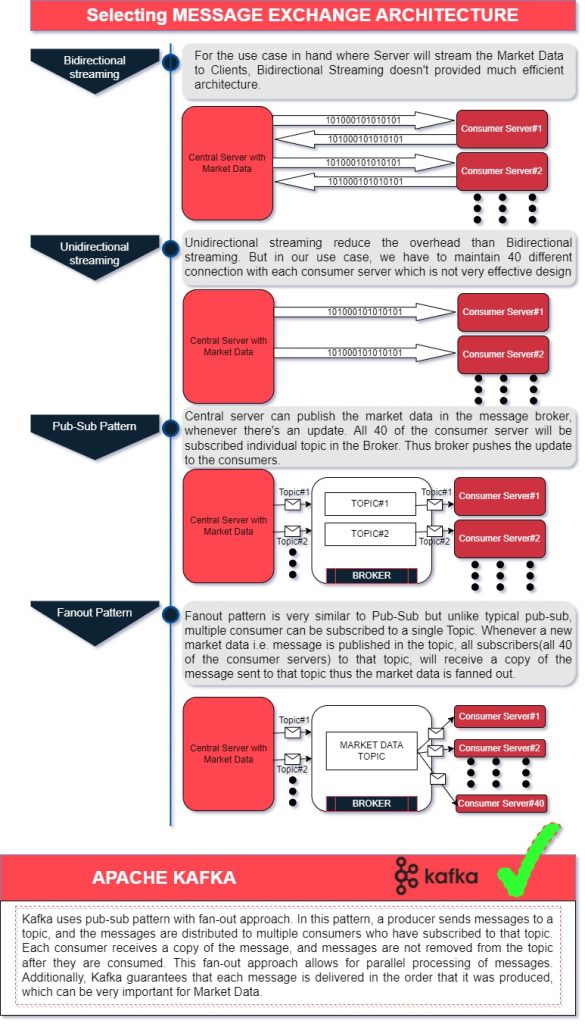

Message Exchange Architecture

With the communication protocol selected, let’s analyze what the Message Exchange Architecture can look like. For this, I’ve considered the options below:

Bidirectional streaming:

For the use case at hand, where the Server streams the Market Data to Clients, Bidirectional Streaming isn’t a very efficient architecture, since the data flows almost entirely in one direction.

Unidirectional streaming:

Unidirectional streaming has less overhead than Bidirectional streaming. But in our use case, the central server would still have to maintain 40 separate connections, one per consumer server, which is not a very effective design.

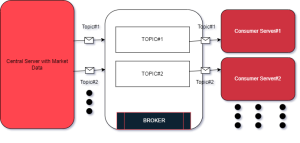

Pub-Sub Pattern:

The central server can publish the market data to the message broker whenever there’s an update. All 40 consumer servers will be subscribed to individual topics in the Broker. The broker then pushes the update to the consumers.

Fanout Pattern:

The Fanout pattern is very similar to Pub-Sub, but unlike the per-consumer topics above, multiple consumers are subscribed to a single Topic. Whenever new market data, i.e. a message, is published to the topic, all subscribers (all 40 consumer servers) receive a copy of that message; thus the market data is fanned out.

Hence, after some consideration, the Fanout Pattern is the winner in my book. To implement such a pattern, Kafka becomes a primary candidate for the message broker in my solution design.
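The fan-out idea can be sketched with a toy in-memory broker: one topic, 40 subscribers, and every published message copied to each of them. This is only an illustration of the pattern; the class `FanoutBroker` and the topic name are hypothetical and no real broker API is used.

```python
import copy
from collections import defaultdict


class FanoutBroker:
    """Toy in-memory broker: every subscriber of a topic gets its own copy."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver a copy of the message to each subscriber; return the count."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(copy.deepcopy(message))
        return len(callbacks)


broker = FanoutBroker()
inboxes = {f"consumer-{i:02d}": [] for i in range(1, 41)}
for inbox in inboxes.values():
    broker.subscribe("market-data", inbox.append)

delivered = broker.publish("market-data", {"symbol": "EURUSD", "bid": 1.0841})
print(delivered)  # 40
```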

|| APACHE KAFKA ||

Kafka uses a pub-sub pattern with a fan-out approach. In this pattern, a producer sends messages to a topic, and the messages are distributed to the consumers that have subscribed to that topic. Each consumer group receives its own copy of every message, and messages are not removed from the topic after they are consumed (they are kept until the retention period expires). This fan-out approach allows for the parallel processing of messages. Additionally, Kafka preserves the order in which messages were produced within a partition, which can be very important for Market Data.

Hence, I’ve decided to try Kafka in this solution design, although some other services were considered as alternate solutions as well.
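The retention and ordering behaviour described above can be modelled with a toy append-only log in which each consumer group keeps its own read offset. This is a simplified model of a single partition, not the Kafka client API; `PartitionLog` and the group names are hypothetical.

```python
class PartitionLog:
    """Toy model of one partition: an ordered log plus per-group read offsets."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # group_id -> next offset to read

    def append(self, record) -> int:
        """Append a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group_id: str, max_records: int = 10) -> list:
        """Return the next records for a group, in produced order, and advance its offset."""
        start = self._offsets.get(group_id, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group_id] = start + len(batch)
        return batch


log = PartitionLog()
for bid in (1.0841, 1.0842, 1.0843):
    log.append({"symbol": "EURUSD", "bid": bid})

first = log.poll("consumer-group-a")   # all three records, in order
second = log.poll("consumer-group-b")  # the same three records: nothing was removed
again = log.poll("consumer-group-a")   # empty: group a has already read everything
print(len(first), len(second), len(again))  # 3 3 0
```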

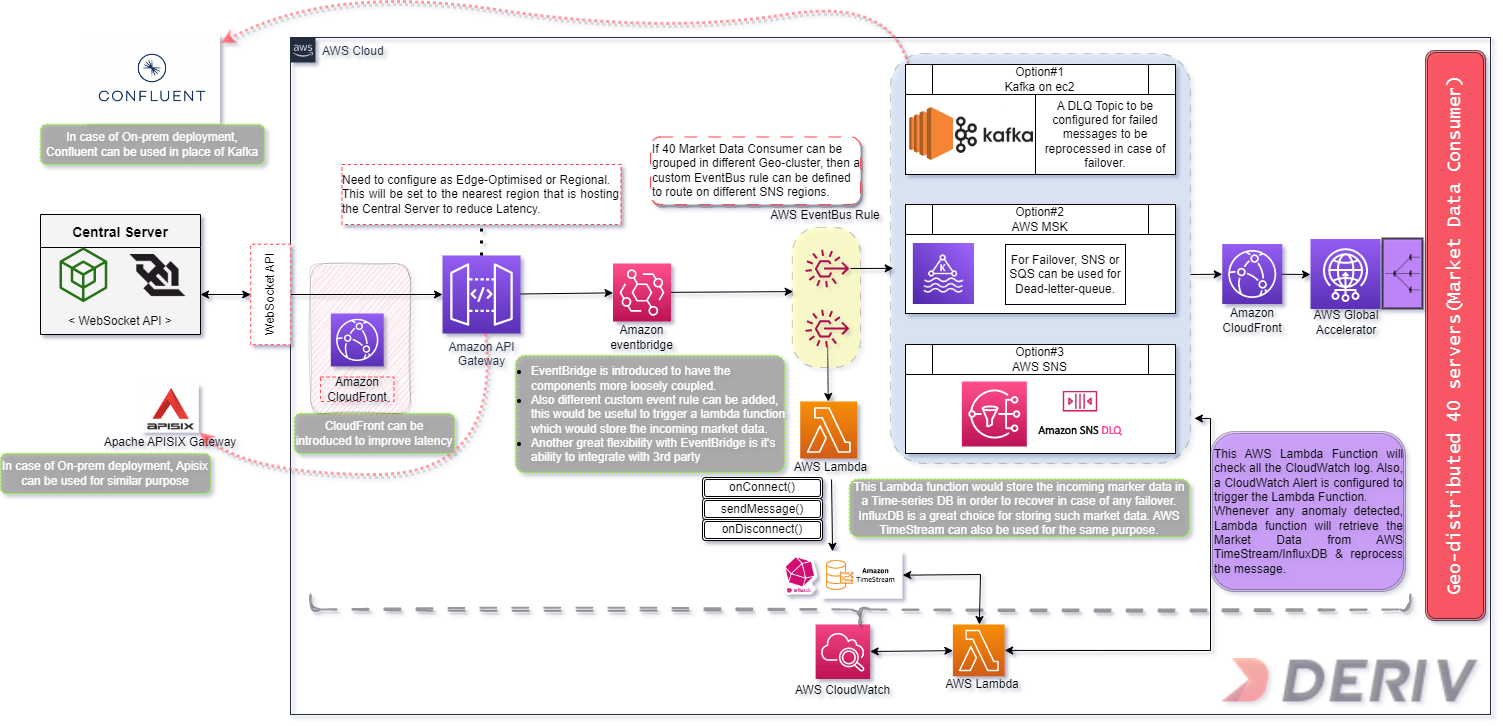

High Level Architecture

Let’s begin the design from the source . . . The Central Server where the Market Data is located.

Now, previously we decided on using the WebSocket protocol and also assumed that Deriv’s financial system already uses WebSocket. So, this central server exposes the Market Data through a WebSocket API. This WebSocket API will be exposed through an API Gateway. If we go for an on-prem private cloud deployment, then we can use Apache APISIX as the API Gateway. However, since the component is meant to be used on a global scale, spanning different geo-locations, it doesn’t make much sense to go for an on-prem setup unless there’s a specific use case. So for this system design, I’d recommend going with AWS’s API Gateway.

While configuring the AWS API Gateway, we need to choose its endpoint scope (Edge-Optimized or Regional). It should be deployed in the region nearest to the Central Server to reduce latency on Market Data ingest. AWS CloudFront can be introduced here as well, to reduce latency further.

Now, after much thought, I’ve decided to introduce AWS EventBridge to this system design. AWS API Gateway will pass the data stream to AWS EventBridge in the next hop. Reasons for using EventBridge are as follows:

- EventBridge is introduced to have the components more loosely coupled.

- Also, different custom event rules can be added. This would be useful to trigger a Lambda function which stores the incoming market data.

- Another great flexibility with EventBridge is its ability to integrate with 3rd parties. If needed, EventBridge can connect to other services outside AWS.

EventBridge also has an interesting capability to add custom EventBus Rules. For the next hop in my system design, one of the options is AWS SNS. If the destinations (40 Market Data Consumers) can be grouped into different geo-clusters, then custom EventBus rules can be defined to route to SNS topics in different regions. This could contribute to reducing latency as well.
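The rule-based routing idea can be sketched as follows. The matcher is a deliberately simplified version of event pattern matching (every pattern key must be present, and the event value must be one of the listed values); real EventBridge patterns support more operators. The `geo_cluster` field and the target names are hypothetical.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Simplified pattern match: nested dicts recurse, lists hold allowed values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Hypothetical rules: one archives everything, the others route by geo-cluster.
RULES = [
    {"pattern": {"source": ["market-data"]}, "target": "archive-lambda"},
    {"pattern": {"detail": {"geo_cluster": ["apac"]}}, "target": "sns-apac"},
    {"pattern": {"detail": {"geo_cluster": ["emea"]}}, "target": "sns-emea"},
]


def route(event: dict) -> list:
    """Return the targets of every rule whose pattern matches the event."""
    return [rule["target"] for rule in RULES if matches(rule["pattern"], event)]


event = {"source": "market-data", "detail": {"geo_cluster": "apac", "symbol": "EURUSD"}}
print(route(event))  # ['archive-lambda', 'sns-apac']
```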

Another EventBus Rule can be defined to pass the data to a custom AWS Lambda function. The purpose of this Lambda function is to keep track of all the messages passed through this data pipeline in permanent storage. This Lambda function would store the incoming market data in a Time-series DB so that it can be recovered in case of a failure.

InfluxDB is a great choice for storing such market data. AWS TimeStream can also be used for the same purpose.
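A sketch of what the archiving Lambda could look like is shown below. To keep it self-contained, an in-memory class stands in for the time-series database; a real function would use the InfluxDB or Timestream client instead. The handler name, the store class and the payload fields are assumptions; only the `time` and `detail` keys follow the standard EventBridge event envelope.

```python
class InMemoryTimeSeriesStore:
    """Stand-in for a time-series database, used only for this sketch."""

    def __init__(self):
        self.points = []

    def write(self, timestamp: str, symbol: str, fields: dict) -> None:
        self.points.append({"time": timestamp, "symbol": symbol, "fields": fields})


STORE = InMemoryTimeSeriesStore()


def archive_handler(event, context, store=STORE):
    """Lambda-style handler: persist one market data event keyed by its timestamp."""
    detail = event["detail"]
    fields = {key: value for key, value in detail.items() if key != "symbol"}
    store.write(event["time"], detail["symbol"], fields)
    return {"stored": len(store.points)}


sample_event = {
    "time": "2024-01-01T00:00:00Z",
    "detail": {"symbol": "EURUSD", "bid": 1.0841, "ask": 1.0843},
}
result = archive_handler(sample_event, context=None)
print(result)  # {'stored': 1}
```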

Since we are using WebSocket APIs in AWS API Gateway, we can attach our custom code to the connect, disconnect & message routes (e.g. $connect, $disconnect & a custom sendMessage route) to store & track the market data passed through this Data Pipeline. This will be handy when we set up the monitoring for this system, which I’ll explain a bit later. Kindly bear with me till then. 🙂
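The custom code on the WebSocket routes could be a single Lambda-style handler that dispatches on the route key. In the sketch below, `sendMessage` is an assumed custom route name, and the in-memory `CONNECTIONS` and `RECEIVED` collections are stand-ins: a real Lambda would need persistent storage, since memory is not shared across invocations.

```python
import json

CONNECTIONS = set()  # stand-in for a persistent connection table
RECEIVED = []        # stand-in for the message tracking store


def websocket_handler(event, context):
    """Dispatch an API Gateway WebSocket event based on its route key."""
    request_context = event["requestContext"]
    route_key = request_context["routeKey"]
    connection_id = request_context["connectionId"]

    if route_key == "$connect":
        CONNECTIONS.add(connection_id)
    elif route_key == "$disconnect":
        CONNECTIONS.discard(connection_id)
    elif route_key == "sendMessage":
        RECEIVED.append({"from": connection_id, "body": json.loads(event["body"])})
    else:
        return {"statusCode": 400}
    return {"statusCode": 200}


connect = {"requestContext": {"routeKey": "$connect", "connectionId": "abc123"}}
message = {
    "requestContext": {"routeKey": "sendMessage", "connectionId": "abc123"},
    "body": json.dumps({"symbol": "EURUSD", "bid": 1.0841}),
}
print(websocket_handler(connect, None), websocket_handler(message, None))
```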

Now finally, to broadcast the market data to all 40 destination nodes, I’ve considered the 3 options below using AWS Services. However, if we want an on-prem option for a similar system design, we can use Confluent, which is based on Kafka but expands on core Kafka capabilities. It has good support for multi-geo replication for Kafka, which can come in very handy in our use case. Now coming back to AWS Services, we have 3 options:

Kafka on ec2:

For option #1, we can consider deploying a multi-geo replicated Kafka cluster (more on that in the Failover section below) across different geo-locations. A DLQ (Dead-Letter-Queue) Topic is to be configured so that failed messages can be reprocessed. While this option gives us more flexibility & control over the message broker, it also introduces operational management overhead.
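The dead-letter idea can be sketched independently of any broker: try to deliver each message a few times, and park it in a dead-letter list if every attempt fails. The function name, the retry count of 3 and the sample messages are assumptions for illustration.

```python
def deliver_with_dlq(messages, send, max_attempts: int = 3) -> list:
    """Try to send each message; return the ones that failed every attempt."""
    dead_letters = []
    for message in messages:
        for attempt in range(1, max_attempts + 1):
            try:
                send(message)
                break  # delivered, move on to the next message
            except ConnectionError:
                if attempt == max_attempts:
                    dead_letters.append(message)
    return dead_letters


sent = []


def flaky_send(message):
    """Hypothetical sender that can never reach the consumer for symbol 'BAD'."""
    if message["symbol"] == "BAD":
        raise ConnectionError("consumer unreachable")
    sent.append(message)


dead = deliver_with_dlq([{"symbol": "EURUSD"}, {"symbol": "BAD"}], flaky_send)
print(dead)  # [{'symbol': 'BAD'}]
```

The messages collected in the dead-letter list can later be re-submitted once the consumer is reachable again.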

AWS MSK:

Another option is AWS’s Managed Streaming for Apache Kafka (AWS MSK). MSK can securely stream data with a fully managed, highly available Apache Kafka service. For failover, SNS or SQS can also be used as a Dead-Letter-Queue.

AWS SNS:

Another great option here is AWS SNS. It can act as a single message bus that delivers the market data to all 40 destination nodes. It is a fast & highly fault-tolerant service that can deliver reliably & with minimal latency. For failover, a DLQ can be configured on the SNS subscriptions (an SQS queue that collects undeliverable messages).

Last Mile Delivery:

Any of the 3 options mentioned above can be used as the message broker service. From any of them, the next hop will be the last-mile delivery.

Last-mile delivery for this component can be very tricky, since all 40 consumers are distributed across the globe. One of the biggest reasons to choose AWS is its global footprint. AWS has a strong private backbone network with low latency, & AWS lets you stream your data through that backbone for a reasonable charge. In my design, I’ve suggested using AWS Global Accelerator along with AWS CloudFront to get the best latency, so that our Market Data streams through the AWS backbone for last-mile delivery to the 40 consumer servers.

Monitoring:

For monitoring, first, we’ll enable AWS CloudWatch to capture the logs of all the AWS services used in this component. We’ll introduce another Lambda function for monitoring, which will check the CloudWatch logs. Also, a CloudWatch Alarm will be configured to trigger the Lambda function in case any anomaly is detected. Both of these will keep a close eye on the whole component. Whenever an anomaly is detected, the Lambda function will retrieve the Market Data from AWS TimeStream/InfluxDB & reprocess the message. As mentioned earlier, the other Lambda function keeps track of the incoming market data stream & stores it in a Time-Series DB; the monitoring Lambda can leverage this stored stream for faster reprocessing. We can extend this Lambda function to re-submit the failed messages to the message broker topic for reprocessing.
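The reprocessing step could work roughly as sketched below: compare what was stored in the time-series DB with what was confirmed as delivered, and re-submit the difference. This assumes every message carries a sequence number, which is my assumption and not something stated in the design; the function names and sample data are hypothetical.

```python
def find_missing(stored_seqs, delivered_seqs) -> list:
    """Sequence numbers that were archived but never confirmed as delivered."""
    return sorted(set(stored_seqs) - set(delivered_seqs))


def replay(store: dict, delivered_seqs, publish) -> list:
    """Re-submit every archived message that is missing from the delivered set."""
    missing = find_missing(store.keys(), delivered_seqs)
    for seq in missing:
        publish(store[seq])
    return missing


# Hypothetical archive keyed by sequence number.
archive = {
    1: {"seq": 1, "bid": 1.0841},
    2: {"seq": 2, "bid": 1.0842},
    3: {"seq": 3, "bid": 1.0843},
    4: {"seq": 4, "bid": 1.0844},
}
resubmitted = []
missing = replay(archive, delivered_seqs=[1, 2, 4], publish=resubmitted.append)
print(missing)  # [3]
```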

Failover Plan

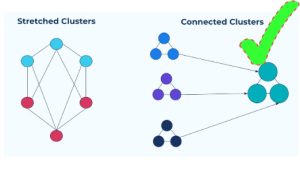

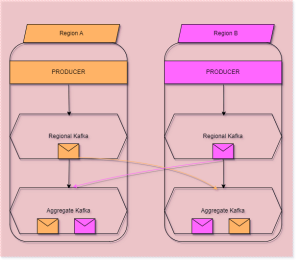

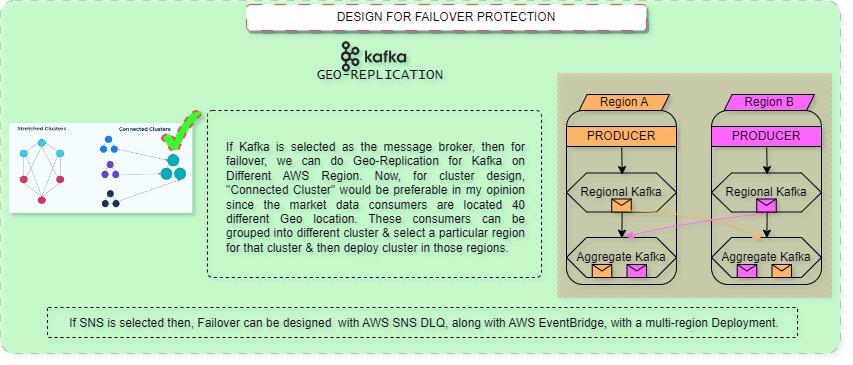

If Kafka is selected as the message broker, then for failover we can do Geo-Replication for Kafka across different AWS Regions. For the cluster design, “Connected Cluster” would be preferable in my opinion, since the market data consumers are located in 40 different geo-locations. These consumers can be grouped into clusters, a region selected for each cluster & a Kafka cluster deployed in each of those regions.

If SNS is selected, then failover can be designed with an AWS SNS DLQ, along with AWS EventBridge, in a multi-region deployment.
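Whichever broker is selected, the multi-region failover logic boils down to an ordered list of regions per consumer group, with traffic moving to the next healthy region when the preferred one is down. The sketch below illustrates that idea; the consumer group names and the preference order are hypothetical.

```python
# Hypothetical failover preference per consumer group (first entry is the primary region).
FAILOVER_ORDER = {
    "apac-consumers": ["ap-southeast-1", "eu-west-1", "us-east-1"],
    "emea-consumers": ["eu-west-1", "us-east-1", "ap-southeast-1"],
}


def pick_region(group: str, healthy_regions: set) -> str:
    """Return the first healthy region in the group's preference order."""
    for region in FAILOVER_ORDER[group]:
        if region in healthy_regions:
            return region
    raise RuntimeError(f"no healthy region available for {group}")


print(pick_region("apac-consumers", {"ap-southeast-1", "eu-west-1"}))  # ap-southeast-1
print(pick_region("apac-consumers", {"eu-west-1", "us-east-1"}))       # eu-west-1
```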

Summary

All in all, I want to conclude by thanking you for giving me the opportunity to work on this very interesting case study. I thoroughly enjoyed the journey.

I believe that in System Design, there’s no absolutely perfect design. It all depends on the specific use case & scenario. While I have suggested this system design for the component, I’m open to suggestions on any step where we could approach the design from a different angle. 🙂

Footnote on Requirements:

- All the 40 different servers should receive the market data simultaneously yet reliably

Tamim: Refer to High Level Architecture Segment.

- Produce a high-level architecture with all the components that you consider are required to implement your solution

Tamim: Refer to High Level Architecture Segment.

- Also, how will you develop failover capabilities in case of a disaster

Tamim: Refer to Failover Segment.

- Document the tradeoffs between components and algorithms, and explain your choices

Tamim: Refer to Communication Protocol, Message Exchange Architecture & High Level Architecture Segment.

- Document alternate solution(s) that you considered

Tamim: Refer to Communication Protocol, Message Exchange Architecture & High Level Architecture Segment.

- Document why you went with your approach

Tamim: Refer to Communication Protocol, Message Exchange Architecture & High Level Architecture Segment.

- It’s perfectly valid to make some assumptions, but document those assumptions

Tamim: Refer to Assumptions Segment.